If you’re wondering how to optimize your WordPress robots.txt file for better SEO, you’ve come to the right place.

In this quick guide, I’ll explain what a robots.txt file is, why it matters for your search rankings, and how to edit it and submit it to Google.

Let’s dive in!

What is a WordPress robots.txt file and do I need to worry about it?

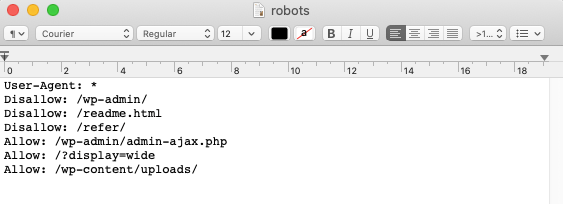

A robots.txt file is a file on your site that allows you to deny search engines access to certain files and folders. You can use it to block Google’s (and other search engines) bots from crawling certain pages on your site. Here’s an example of the file:

So how does denying access to search engines actually improve your SEO? Seems counterintuitive…

It works like this: The more pages on your site, the more pages Google has to crawl.

For example, if your blog has a lot of category and tag pages, those pages are often low-quality and don’t need to be crawled by search engines; they just consume your site’s crawl budget (the allotted number of pages Google will crawl on your site at any given time).

Crawl budget is important because it determines how quickly Google picks up on your site’s changes – and thus how quickly you’re ranked. It can especially help with eCommerce SEO!

Just be careful to do this right, as it can harm your SEO if done poorly. For more info on how to properly no-index the right pages, check out this guide by DeepCrawl.

So, do you need to mess with your WordPress robots.txt file?

If you’re in a highly competitive niche with a large site, probably. If you’re just starting your first blog, however, building links to your content and creating lots of high-quality articles are bigger priorities.

How to optimize your WordPress robots.txt file for better SEO

Now, let’s discuss how to find (or create) and optimize your WordPress robots.txt file.

Robots.txt usually resides in your site’s root folder. You will need to connect to your site using an FTP client or by using your cPanel’s file manager to view it. It’s just an ordinary text file that you can then open with Notepad.

If you don’t have a robots.txt file in your site’s root directory, then you can create one. All you need to do is create a new text file on your computer and save it as robots.txt. Then, just upload it to your site’s root folder.
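If you’d rather generate the file than type it by hand, the step above can be sketched in Python. This is only a minimal sketch, not an official WordPress tool: the helper name `build_robots_txt`, the sample rules, and the `example.com` sitemap URL are all assumptions made for illustration.

```python
from pathlib import Path


def build_robots_txt(disallow, allow=(), sitemaps=(), user_agent="*"):
    """Return robots.txt content as one string (hypothetical helper)."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    if sitemaps:
        lines.append("")  # blank line separates the rule group from the sitemaps
        lines += [f"Sitemap: {url}" for url in sitemaps]
    return "\n".join(lines) + "\n"


content = build_robots_txt(
    disallow=["/readme.html"],
    allow=["/wp-content/uploads/"],
    sitemaps=["https://example.com/sitemap.xml"],  # placeholder URL
)

# Save the file locally; you would then upload it to your site's root folder.
Path("robots.txt").write_text(content, encoding="utf-8")
```

The script only creates the file on your computer. Uploading it still happens through FTP or your cPanel file manager, as described above.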

What does an ideal robots.txt file look like?

The format for a robots.txt file is really simple. The first line usually names a user agent, which is the name of the search bot you’re trying to communicate with (for example, Googlebot or Bingbot). You can use an asterisk (*) to address all bots.

The next lines contain Allow or Disallow instructions, so search engines know which parts of your site you want them to crawl and which parts you want them to stay out of.

Here’s an example:

    User-Agent: *
    Allow: /?display=wide
    Allow: /wp-content/uploads/
    Disallow: /readme.html
    Disallow: /refer/
    Sitemap: http://www.codeinwp.com/post-sitemap.xml
    Sitemap: http://www.codeinwp.com/page-sitemap.xml
    Sitemap: http://www.codeinwp.com/deals-sitemap.xml
    Sitemap: http://www.codeinwp.com/hosting-sitemap.xml

Note that if you’re using a plugin like Yoast or All in One SEO, you may not need to add the sitemap section, as they try to do so automatically. If it fails, you can add it manually like in the example above.
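To see how a bot might read rules like these, here is a small sketch using Python’s standard `urllib.robotparser` module. The rules are modeled on the example above, but the `example.com` domain, the sample paths, and the `is_allowed` helper are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Rules modeled on the example above; example.com is a placeholder domain.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-content/uploads/
Disallow: /readme.html
Disallow: /refer/
Sitemap: https://example.com/post-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(path, agent="Googlebot"):
    """Return True if the parsed rules let `agent` crawl `path`."""
    return parser.can_fetch(agent, path)


for path in ["/readme.html", "/refer/partner", "/wp-content/uploads/logo.png", "/blog/my-post/"]:
    print(path, "->", "allowed" if is_allowed(path) else "blocked")

# site_maps() is available in Python 3.8 and later.
print(parser.site_maps())
```

Keep in mind that Python’s parser applies the first rule that matches, which may differ from how Google resolves conflicting Allow and Disallow rules, so treat this as a quick sanity check rather than a substitute for Google’s own testing tool.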

What should I disallow or noindex?

Google’s webmaster guidelines advise webmasters not to use their robots.txt file to hide low-quality content. Thus, using your robots.txt file to stop Google from indexing your category, date, and other archive pages may not be a wise choice.

Remember, robots.txt only tells bots which parts of your site they may crawl. It doesn’t force them to comply, and a disallowed page can still end up in the index if other sites link to it.

Also, you don’t need to add your WordPress login page, admin directory, or registration page to robots.txt because login and registration pages have the noindex tag added automatically by WordPress.
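If you want to confirm that a page carries a noindex tag, a rough check can be sketched with Python’s built-in `html.parser`. The class name `RobotsMetaFinder`, the `has_noindex` helper, and the two sample snippets are hypothetical; the exact markup WordPress emits may differ between versions.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect the directives from any <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            # Split "noindex, follow" into ["noindex", "follow"].
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


# Illustrative markup only, not copied from a real WordPress install.
sample_login_head = "<head><meta name='robots' content='noindex, follow' /></head>"
sample_post_head = "<head><meta name='robots' content='index, follow' /></head>"

print(has_noindex(sample_login_head))
print(has_noindex(sample_post_head))
```

In practice you would paste in the page source of your own login page (View Source in your browser) instead of the sample strings.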

I do recommend, however, that you disallow the readme.html file in your robots.txt file. This readme file can be used by someone trying to figure out which version of WordPress you’re using. A person can still access the file by just browsing to it, but a Disallow rule may keep bots that respect robots.txt away from it, which can help discourage some automated attacks.

How do I submit my WordPress robots.txt file to Google?

Once you’ve updated or created your robots.txt file, you can submit it to Google using Google Search Console.

However, I recommend testing it first using Google’s robots.txt testing tool.

If you don’t see the version you created here, you’ll have to re-upload the robots.txt file you created to your WordPress site. You can do this using Yoast SEO.
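One way to double-check that the live file matches the version you created is to download it and compare it with your local copy. The sketch below assumes your site serves the file at `/robots.txt`; the function names and the `example.com` URL in the usage comment are placeholders, not part of any Google or Yoast tool.

```python
from urllib.request import urlopen


def normalize(text):
    """Ignore line-ending and trailing-whitespace differences."""
    return [line.rstrip() for line in text.strip().splitlines()]


def same_rules(local_text, live_text):
    """Return True if both versions contain the same lines in the same order."""
    return normalize(local_text) == normalize(live_text)


def fetch_live_robots(site_url):
    """Download robots.txt from the site root (requires network access)."""
    with urlopen(site_url.rstrip("/") + "/robots.txt", timeout=10) as response:
        return response.read().decode("utf-8")


# Example usage (replace the placeholder domain with your own site):
# with open("robots.txt", encoding="utf-8") as f:
#     local = f.read()
# print(same_rules(local, fetch_live_robots("https://example.com")))
```

If the comparison comes back false, the live file is out of date and needs to be uploaded again.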

Conclusion

You now know how to optimize your WordPress robots.txt file for better SEO.

Remember to be careful when making any major changes to your site via robots.txt. While these changes can improve your search traffic, they can also do more harm than good if you’re not careful.

And if you want to keep learning, check out our ultimate roundup of WordPress tutorials!

Let us know in the comments: do you have any questions on how to optimize your WordPress robots.txt file? What kind of impact did taking care of it have on your search rankings?
